KDD 2022

Last week, I attended KDD 2022 in Washington, DC, the first in-person conference I have attended since WSDM 2020 in Houston, almost two and a half years ago. The COVID-19 pandemic still had a sizeable impact on the event, as the majority of researchers and companies from China could not make it to the conference. Even so, participants showed great enthusiasm and hoped that everything could return to the pre-pandemic norm.

Let me share a couple of thoughts regarding the conference.

First of all, Graph Neural Networks (GNNs) are everywhere: they are applied in almost all domains, and both industry and academia show tremendous interest in them. This reminded me of how Graph Mining went viral in the early 2000s with the rise of social networks and the World Wide Web. Surprisingly, topics like PageRank, Community Detection, and Graph Laplacian were rarely mentioned. Of course, this is not unique to Graph Mining. Other sub-fields such as Recommender Systems, Text Mining, and Information Retrieval have also been completely rewritten since the surge of Deep Learning.

Secondly, Causal Inference has become a much more mainstream topic than before, and it is being used in many applications, including industry solutions. One thing worth mentioning is that many researchers still use Causal Inference as an intermediate step to improve prediction performance in some downstream task. What has been broadly ignored is how Causal Inference could bring more insight into the problems at hand. For instance, few papers talked about Causal Effect and its estimation. Moreover, the majority of papers in Causal Inference are essentially Observational Studies, yet they do not discuss the data and assumptions needed for meaningful and robust causal estimation, which could be misleading and risky.

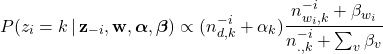

Thirdly, some research topics have declined dramatically. For instance, Optimization was once a pretty hot topic given the popularity of large-scale machine learning, but the need to understand and improve it has been greatly reduced by the rise of various Deep Learning frameworks. Most researchers can reliably use these frameworks to achieve reasonable performance without understanding the underlying optimization algorithms. In addition, it is surprising to observe that Probabilistic Modeling has been almost entirely abandoned. Now, few papers start with assumptions about how the data is generated. Although it is arguably not applicable to all scenarios, Probabilistic Modeling has its own advantages: it can describe certain data generation assumptions explicitly and help us understand certain problems better. That perspective is quite different from, and possibly complementary to, the computational point of view that has dominated the modeling language since the rise of Deep Learning.

Lastly, all of the keynote speakers delivered excellent talks, each with its own flavor. Lise Getoor from UC Santa Cruz is a prominent scholar from the previous wave of Graph Mining. Some of the techniques mentioned in her talk, like Collective Classification, were widely used and even included in some textbooks in the 2000s, but they are largely ignored today. Her research still centers on classical Graph Mining, especially reasoning with graph structures. Milind Tambe from Harvard University, on the other hand, gave a surprisingly good talk. Rather than focusing on technologies and algorithms, Milind told stories about how to apply AI for social good and how to build applications that affect people’s lives in the real world, which sent a strong message to the conference. The last speaker, Shang-Hua Teng from USC, gave a rather theoretical talk on how to balance the relationship between heuristics and theory. One interesting point from the talk was that Shang-Hua explicitly categorized quite a number of widely used algorithms as heuristics, even though practitioners might consider them theoretically oriented algorithms.

Compared to KDD 2019, which I attended in Alaska three years ago, this year’s KDD was different in the number of participants, the number of companies, and the diversity of research topics. It seems quite clear that we are turning the corner on the latest wave of Deep Learning. I’m looking forward to meeting with friends next time in LA.