Two important distributions related to the Dirichlet distribution

In this post, we review two important facts about the Dirichlet distribution. The basic setting is as follows. Suppose we have a discrete space $latex \mathcal{X} = \{ \mathcal{X}_{1},\mathcal{X}_{2}, \ldots,\mathcal{X}_{K} \}$, whose elements are the $latex K$ outcomes that can be observed from a “random experiment”. Suppose we have $latex N$ observations $latex \{X_{1}, X_{2},\ldots, X_{N}\}$ drawn independently from a Multinomial distribution with parameter $latex \theta$, where $latex \theta_{i} = P(X_{j} = \mathcal{X}_{i})$. After placing a Dirichlet prior $latex \mbox{Dir}(\alpha)$ on $latex \theta$, we are interested in the following two questions:

- What is the posterior distribution $latex P(\theta \mid X_{1},\ldots,X_{N})$?
- What is the predictive distribution $latex P(X_{N+1} = \mathcal{X}_{i} \mid X_{1},\ldots,X_{N})$?
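To make this setting concrete, here is a minimal sketch of the generative process it describes: draw $latex \theta$ from $latex \mbox{Dir}(\alpha)$, then draw $latex N$ independent outcomes with probabilities $latex \theta$. It assumes outcomes are encoded as indices 0..K-1 standing in for $latex \mathcal{X}_{1},\ldots,\mathcal{X}_{K}$; the prior values, the seed, and the helper names `sample_dirichlet` and `sample_observations` are hypothetical choices for illustration. The Dirichlet draw uses the standard construction of normalising independent Gamma($latex \alpha_{k}$, 1) variables, so only the Python standard library is needed.

```python
import random

def sample_dirichlet(alpha, rng):
    """Draw theta ~ Dir(alpha) by normalising independent Gamma(alpha_k, 1) draws."""
    gammas = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(gammas)
    return [g / total for g in gammas]

def sample_observations(theta, n_obs, rng):
    """Draw n_obs i.i.d. outcome indices with P(X_j = k) = theta[k]."""
    return rng.choices(range(len(theta)), weights=theta, k=n_obs)

rng = random.Random(0)                 # hypothetical seed, for reproducibility
alpha = [2.0, 1.0, 0.5]                # hypothetical prior parameters, K = 3
theta = sample_dirichlet(alpha, rng)   # theta lies on the probability simplex
xs = sample_observations(theta, n_obs=10, rng=rng)
print(theta, xs)
```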

Posterior Distribution

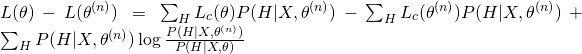

We can derive the posterior distribution directly from Bayes' rule:

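$latex P(\theta \mid X_{1},\ldots,X_{N}) \propto P(X_{1},\ldots,X_{N} \mid \theta)\,P(\theta) = \prod_{k=1}^{K} \theta_{k}^{n(k)} \cdot \frac{\Gamma(\sum_{k=1}^{K}\alpha_{k})}{\prod_{k=1}^{K}\Gamma(\alpha_{k})} \prod_{k=1}^{K} \theta_{k}^{\alpha_{k}-1} \propto \prod_{k=1}^{K} \theta_{k}^{\alpha_{k}+n(k)-1}$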

where $latex n(k)$ is the number of times outcome $latex \mathcal{X}_{k}$ appears in the data. Therefore, the posterior distribution is indeed a Dirichlet distribution, $latex \mbox{Dir} \Bigl( \alpha_{1}+n(1),\alpha_{2}+n(2),\ldots,\alpha_{K}+n(K) \Bigr)$. If we have only one observation $latex X$, the posterior is simply $latex \mbox{Dir} \Bigl( \alpha_{1}+\delta_{1}(X),\alpha_{2}+\delta_{2}(X),\ldots,\alpha_{K}+\delta_{K}(X) \Bigr)$, where $latex \delta_{k}(X)$ equals $latex 1$ if $latex X$ takes the value $latex \mathcal{X}_{k}$ and $latex 0$ otherwise. This notation extends to the general case: since $latex n(k) = \sum_{i=1}^{N} \delta_{k}(X_{i})$, the posterior distribution is $latex \mbox{Dir} \Bigl( \alpha_{1}+\sum_{i=1}^{N} \delta_{1}(X_{i}),\alpha_{2}+\sum_{i=1}^{N} \delta_{2}(X_{i}),\ldots,\alpha_{K}+\sum_{i=1}^{N} \delta_{K}(X_{i}) \Bigr)$.
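The posterior update is just "add the counts to the prior parameters". The sketch below computes it two ways, once from the counts $latex n(k)$ and once by adding $latex \delta_{k}(X_{i})$ one observation at a time, to show that the two forms above agree. It assumes outcomes are encoded as indices 0..K-1; the function names and the example data are hypothetical.

```python
from collections import Counter

def posterior_params(alpha, xs):
    """Posterior Dir(alpha_1 + n(1), ..., alpha_K + n(K)) from observed outcome indices."""
    counts = Counter(xs)                      # n(k); unseen outcomes count as 0
    return [a + counts[k] for k, a in enumerate(alpha)]

def posterior_params_sequential(alpha, xs):
    """Same posterior, built one observation at a time by adding delta_k(X_i)."""
    params = list(alpha)
    for x in xs:
        for k in range(len(params)):
            params[k] += 1 if x == k else 0   # delta_k(X_i)
    return params

alpha = [2.0, 1.0, 0.5]   # hypothetical prior parameters
xs = [0, 2, 0, 1, 0]      # hypothetical data: n(1) = 3, n(2) = 1, n(3) = 1
print(posterior_params(alpha, xs))  # → [5.0, 2.0, 1.5]
```

Because each observation only touches one coordinate, the sequential version also shows why the single-observation posterior can serve as the prior for the next observation.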

Predictive Distribution

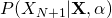

Using the posterior distribution derived above, we obtain the predictive distribution as follows:

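$latex \begin{aligned} P(X_{N+1} = \mathcal{X}_{i} \mid X_{1},\ldots,X_{N}) &= \int P(X_{N+1} = \mathcal{X}_{i} \mid \theta)\, P(\theta \mid X_{1},\ldots,X_{N})\, d\theta \\ &= \int \theta_{i}\, P(\theta \mid X_{1},\ldots,X_{N})\, d\theta \\ &= \mathbb{E}[\theta_{i} \mid X_{1},\ldots,X_{N}] \\ &= \frac{\alpha_{i}+n(i)}{\sum_{k=1}^{K}\alpha_{k}+N} \end{aligned}$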

where the integral is the expectation of $latex \theta_{i}$ under the posterior, and since that posterior is the Dirichlet distribution derived above, this expectation is the Dirichlet mean $latex \frac{\alpha_{i}+n(i)}{\sum_{k=1}^{K}\alpha_{k}+N}$. Note that this final result can be rewritten as:

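$latex \frac{\alpha_{i}+n(i)}{\alpha_{0}+N} = \frac{\alpha_{0}}{\alpha_{0}+N} \cdot \frac{\alpha_{i}}{\alpha_{0}} + \frac{N}{\alpha_{0}+N} \cdot \frac{n(i)}{N}$, where $latex \alpha_{0} = \sum_{k=1}^{K} \alpha_{k}$.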

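This is a weighted sum of the prior mean of $latex \theta_{i}$, namely $latex \alpha_{i} / \sum_{k=1}^{K}\alpha_{k}$, and the maximum-likelihood estimate (MLE) of $latex \theta_{i}$, namely $latex n(i)/N$; as $latex N$ grows, the weight shifts from the prior mean toward the MLE. An extreme case of the predictive distribution arises when no data points have been observed yet ($latex N = 0$). Using the equation derived above, we then have: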

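$latex P(X_{1} = \mathcal{X}_{i}) = \frac{\alpha_{i}}{\sum_{k=1}^{K}\alpha_{k}}$, i.e., the prediction is simply the prior mean of $latex \theta_{i}$.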
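The predictive results above can be checked numerically. The sketch below, assuming outcomes are encoded as indices 0..K-1 and using exact `Fraction` arithmetic, computes $latex (\alpha_{i}+n(i))/(\alpha_{0}+N)$ directly, recomputes it as the prior-mean/MLE mixture, evaluates the $latex N = 0$ case, and compares against a Monte Carlo estimate of $latex \mathbb{E}[\theta_{i}]$ under the posterior Dirichlet (sampled via normalised Gamma draws). The function names, prior, data, and sample size are hypothetical choices for illustration.

```python
import random
from collections import Counter
from fractions import Fraction

def predictive_probs(alpha, xs):
    """P(X_{N+1} = outcome i | X_1..X_N) = (alpha_i + n(i)) / (alpha_0 + N) for every i."""
    counts = Counter(xs)                      # n(i); unseen outcomes count as 0
    denom = sum(alpha) + len(xs)              # alpha_0 + N
    return [(a + counts[i]) / denom for i, a in enumerate(alpha)]

def mixture_form(alpha, xs, i):
    """Same quantity as w * prior_mean + (1 - w) * MLE, with w = alpha_0 / (alpha_0 + N).
    Requires N > 0 so that the MLE n(i) / N is defined."""
    alpha0, n = sum(alpha), len(xs)
    w = alpha0 / (alpha0 + n)                 # weight on the prior mean
    prior_mean = alpha[i] / alpha0            # alpha_i / alpha_0
    mle = Fraction(Counter(xs)[i], n)         # n(i) / N
    return w * prior_mean + (1 - w) * mle

def mc_posterior_mean(alpha, xs, i, n_samples=50_000, seed=0):
    """Monte Carlo estimate of E[theta_i] under the posterior Dir(alpha + counts),
    drawing each Dirichlet sample as normalised Gamma(param, 1) variables."""
    counts = Counter(xs)
    post = [float(a + counts[k]) for k, a in enumerate(alpha)]
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_samples):
        g = [rng.gammavariate(p, 1.0) for p in post]
        total += g[i] / sum(g)
    return total / n_samples

alpha = [Fraction(2), Fraction(1), Fraction(1, 2)]   # hypothetical prior, alpha_0 = 7/2
xs = [0, 2, 0, 1, 0]                                  # hypothetical data, N = 5
print(predictive_probs(alpha, xs))  # → [Fraction(10, 17), Fraction(4, 17), Fraction(3, 17)]
print(mixture_form(alpha, xs, 0))   # → 10/17
print(predictive_probs(alpha, []))  # → [Fraction(4, 7), Fraction(2, 7), Fraction(1, 7)]
print(mc_posterior_mean(alpha, xs, 0))  # should be close to 10/17
```

Exact fractions are used so that the mixture identity holds with equality rather than up to floating-point rounding; the Monte Carlo estimate only agrees approximately.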

These two distributions are used heavily in Dirichlet/Multinomial Bayesian modeling and are also stepping stones toward understanding the Dirichlet Process.