Unbiased Offline Evaluation Process of Contextual Multi-armed Bandit Models 2

In this post, I would like to discuss some strategies to evaluate contextual multi-armed bandit (cMAB) models in an unbiased offline evaluation setting. The setting is not only applicable for cMAB models but also can be served as a standard process for any machine learning models from online feedback data.

Review of Contextual Multi-armed Bandit Problems

A cMAB problem is a sequential decision making process. Bandit problems invovle making a decision in each round. Once a decision is made an observation is collected and the corresponding reward computed. The contextual-bandit formalism generalizes this classic setting by introducing contextual information in the interaction loop.

Formally, we define by ![]() , a set of actions, a contextual vector

, a set of actions, a contextual vector ![]() , a reward vector

, a reward vector ![]() , where each

, where each ![]() and a policy

and a policy ![]() . A contextual bandit describes a round-by-round interaction between a learner and the environment. At each round

. A contextual bandit describes a round-by-round interaction between a learner and the environment. At each round ![]() , the problem can be decomposed into following steps:

, the problem can be decomposed into following steps:

- The environment chooses

from some unknown distribution

from some unknown distribution  . Only

. Only  is revealed to the learner while the reward is not.

is revealed to the learner while the reward is not. - Upon observing

, the learner uses some policy

, the learner uses some policy  to choose an action

to choose an action  , and in return observes the corresponding reward

, and in return observes the corresponding reward  .

. - (Optional) The policy

is revised with the data collected for this round.

is revised with the data collected for this round.

Here, we define ![]() is parameterized by an unknown parameter

is parameterized by an unknown parameter ![]() . Ideally, we would like to choose the policy maximizing the expected reward:

. Ideally, we would like to choose the policy maximizing the expected reward:

![]()

An important fact in cMAB is that, only rewards of the chosen actions are observed. An online learning must therefore find a good balance between exploration and exploitation, a challenge in bandit problems. For offline policy evaluation, such partial observation raises even further difficulties. Data in a contextual bandit is often in the form of ![]() , where

, where ![]() is the action chosen for context

is the action chosen for context ![]() when collecting the data, and

when collecting the data, and ![]() is the corresponding reward. If this data is used to evaluate policy

is the corresponding reward. If this data is used to evaluate policy ![]() , which chooses a different action

, which chooses a different action ![]() , then we simply do not have the reward signal to evaluate the policy in that context.

, then we simply do not have the reward signal to evaluate the policy in that context.

Policy Evaluation

In order to evaluate different policies in an unbiased environment, we need to gather data using a logging policy. This policy is specially designed such that the data can be re-use for offline evaluation. As pointed out in [1], a randomized data collection, which has been known to be critical condition for drawing valid causal conclusions. From [1], the authors proposed the following data collection procedure. At each round,

- Environment chooses

, i.i.d. from some unknown distribution

, i.i.d. from some unknown distribution  , and only reveals context

, and only reveals context  .

. - Based on

, one compute a multinomial distribution

, one compute a multinomial distribution  over the actions

over the actions  . A random action

. A random action  is drawn according to the distribution, and the corresponding reward

is drawn according to the distribution, and the corresponding reward  and probability mass

and probability mass  are logged. Note that

are logged. Note that  may depend on

may depend on  .

.

A key point here is that, rather than choosing the optimal action at each round, we would like to choose a random action according some distribution over actions. Also, the probability to select such action should be recorded.

At the end of the process, we have a dataset ![]() containing data of the form

containing data of the form ![]() . In statistics, the probabilities

. In statistics, the probabilities ![]() are also known as propensity scores.

are also known as propensity scores.

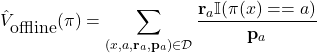

In order evaluate the value of a policy ![]() in such dataset, the following estimator is used:

in such dataset, the following estimator is used:

(1)

Unbiased Offline Evaluation

We define the value of a policy ![]() as:

as:

(2) ![Rendered by QuickLaTeX.com \begin{align*}V(\pi) &= \mathbb{E}_{(x,d) \in \mathbb{D}}\Bigr[ \mathbf{r}_{a} P(\pi(x) = a \, |\, x) \Bigl] \\&= \int_{(x,r) \in \mathbb{D}} \mathbf{r}_{a} P(\pi(x) = a \, |\, x) P(x,r) \, dx dr\end{align*}](https://www.hongliangjie.com/wp-content/ql-cache/quicklatex.com-24c691ae79c28b79d4849f52d3c6398f_l3.png)

(3) ![Rendered by QuickLaTeX.com \begin{align*}V(\pi) &= \mathbb{E}_{(x,d) \in \mathbb{D}}\Bigr[ \mathbf{r}_{\pi_{x}} \Bigl] \\&= \int_{(x,r) \in \mathbb{D}} \mathbf{r}_{\pi_{x}} P(x,r) \, dx dr\end{align*}](https://www.hongliangjie.com/wp-content/ql-cache/quicklatex.com-3b46a2b2c0fe43746a4bf359cd4a13e9_l3.png)

(4) ![]()

(5) ![Rendered by QuickLaTeX.com \begin{align*}\int_{\mathcal{D} \in \mathbb{D}} \Bigr[ \sum_{(x,a,\mathbf{r}_{a},\mathbf{p}_{a}) \in \mathcal{D}} \frac{\mathbf{r}_{a} \mathbb{I}(\pi(x) == a)}{\mathbf{p}_{a}} \Bigl] P(\mathcal{D}) \, d\mathcal{D}\end{align*}](https://www.hongliangjie.com/wp-content/ql-cache/quicklatex.com-bc37efb6428a011251b2bdde73e6fb6c_l3.png)

(6) ![]()

(7) ![Rendered by QuickLaTeX.com \begin{align*}\int_{\mathcal{D} \in \mathbb{D}} \Bigr[ \sum_{(x,a,\mathbf{r}_{a},\mathbf{p}_{a}) \in \mathcal{D}} \frac{\mathbf{r}_{a} \mathbb{I}(\pi(x) == a)}{\mathbf{p}_{a}} \Bigl] P(\mathcal{D}) \, d\mathcal{D} = \int_{\mathcal{D} \in \mathbb{D}} \Bigr[ \sum_{(x, \mathbf{r}) \in \mathcal{D}} \mathbf{r}_{\pi(x)} \Bigl] P(\mathcal{D}) \, d\mathcal{D}\end{align*}](https://www.hongliangjie.com/wp-content/ql-cache/quicklatex.com-d5541922f39b569f4707632a34ca1b98_l3.png)

References

- L. Li, S. Chen, J. Kleban, and A. Gupta. Counterfactual estimation and optimization of click metrics for search engines. Technical Report MSR-TR-2014-32, Microsoft Research, 2014.

- L. Li, W. Chu, J. Langford, and X. Wang. Unbiased offline evaluation of contextual-bandit-based news article recommendation algorithms. In Proceedings of the Fourth ACM International Conference on Web Search and Data Mining, WSDM ’11, pages 297–306, New York, NY, USA, 2011. ACM.

- A. L. Strehl, J. Langford, L. Li, and S. Kakade. Learning from logged implicit exploration data. In NIPS, pages 2217–2225, 2010.